Thank you for the details. I think I now understand the case much better, and it's actually in line with our long-term vision for the analytical part of EasyMorph:

Explorer View

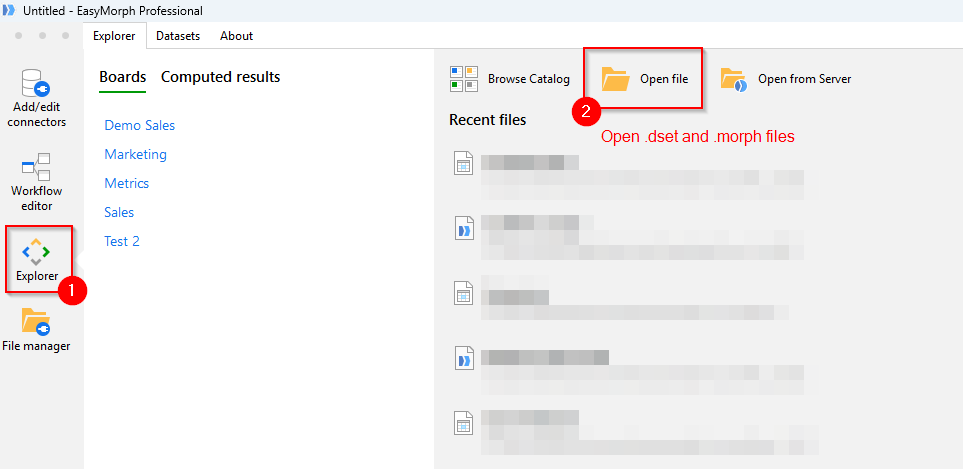

EasyMorph projects will get a new feature for which I still don't have a name (maybe one of the readers can suggest one). I call it Explorer View, but only because I have to call it something.

The Explorer View will live in a new menu tab in the Workflow Editor, and it will have two components - a base data model and its Analysis View.

The base model consists of one or more tables picked from the workflow (including their charts). Let's call them base tables. The base tables may or may not be linked by primary/foreign keys. If two base tables are linked, filtering data in one table will automatically filter the related data in the other base table.

The Analysis View of the base model will include the base table(s) and their charts.

Computed or pre-computed Explorer View

Just as you can currently save a table into a .dset file that contains the table's Analysis View, it will be possible to export a project's Explorer View into a .dset file as well. In this case, the .dset file will contain multiple tables and multiple charts, which is very similar to what you described.

So a .dset file will play the role of a pre-computed Explorer View.

You can think of Explorer View as a superset of the current Analysis View.

Should I call it Super-analysis View? Or maybe Business Analysis View? IDK.

As now, it will be possible to open .dset files in CSViewer or in Explorer in Desktop and Server. Hence the name "Explorer View".

However, the workflow itself will become a dynamically computed Explorer View. If you open a .morph project in Explorer in Desktop, it will prompt for parameters, run, and present its Explorer View.

Actually, this already works in a simpler form: you can already open a .morph file in Explorer in Desktop, and it will prompt for parameters, run, and display the Analysis View of its result table.

To wrap up:

- Pre-computed Explorer View is a .dset file (can be opened in CSViewer or in Explorer in Desktop/Server)

- Dynamically computed Explorer View is a .morph file (can be opened only in Explorer in Desktop/Server)

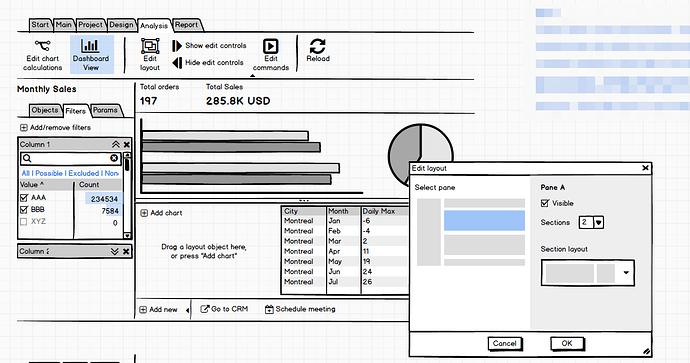

Dashboard mode

The Analysis/Explorer View will get a new mode - the dashboard mode. So, there will be two modes - the analysis mode (which already exists) and the dashboard mode. Both modes are based on the same base model and use the same charts, but present them in different ways. The analysis mode is what you currently know as the Analysis View - a table, filters, and charts.

The dashboard mode will look like a typical managerial dashboard - filters, aggregated metrics, and charts. However, it will have a rather rigid layout with minimal customization options.

We think a dashboard mode is necessary because managers look at and work with data differently than analysts. Dashboards have never been a good tool for data analysis, but they are well-understood by decision-makers.

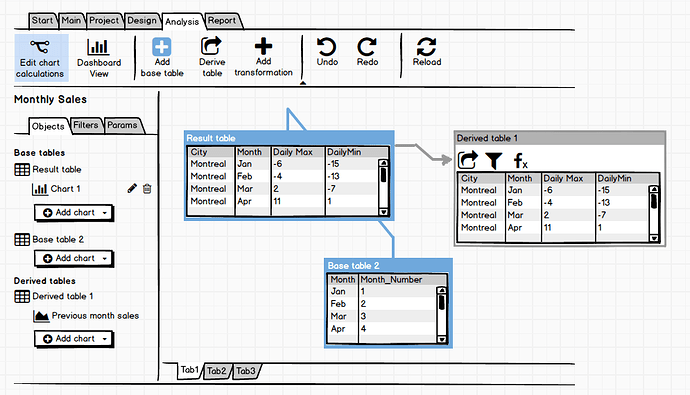

Analytical Engine

Currently, chart calculations are not very flexible. While charts in the Analysis View are good enough for technical data analysis, they fall short for business data analysis, which requires more advanced calculation logic.

Therefore, we will add a second calculation engine, an analytical one, for chart calculations. It will follow exactly the same design principles as the main workflow engine, but with a limited set of actions (basically only those from the "Transform" category) plus derived tables. The starting point for the engine will be the base model.

All in all, we understand that data needs to be presented and analyzed for managerial purposes, and we have a vision of how to address that.

I don't have an exact timeline for all these features, but some of them will definitely appear in version 6 this year. The unification of tables and charts in v5.9 not only simplified things but was also a necessary preparatory step for the new analytical features.

Thoughts?