I need to copy files from an HTTPS site to a local folder (preserving the file names).

I’ve read about using PowerShell, but it’s above my paygrade!

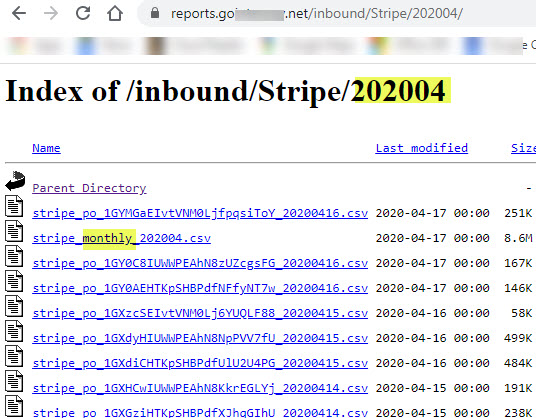

CSV files get dropped into a subfolder for each month, e.g.:

https://reports.abc.net/inbound/Stripe/202004/

I need to copy some of the files from that directory to a local folder, e.g. C:\Stripe.

I don’t want to copy all the files, only the ones that match the pattern ??remittance??.csv.
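One detail worth checking in that pattern: if it is read as a shell-style wildcard (an assumption; that is how most file tools interpret `?` and `*`), each `?` matches exactly one character, so ??remittance??.csv only matches names with exactly two characters on each side of "remittance". The short Python sketch below (Python is used purely for illustration, not as EasyMorph or PowerShell syntax) compares it with *remittance*.csv on some hypothetical file names.

```python
from fnmatch import fnmatchcase


def select(names, pattern):
    """Return the names that match a shell-style wildcard pattern, ignoring case."""
    pat = pattern.lower()
    # fnmatchcase never folds case by itself, so lower-case both sides explicitly.
    return [n for n in names if fnmatchcase(n.lower(), pat)]


# Hypothetical file names, for illustration only.
names = ["xxremittanceyy.csv", "2020_remittance_0416.csv", "stripe_monthly.csv"]

print(select(names, "??remittance??.csv"))  # ['xxremittanceyy.csv']
print(select(names, "*remittance*.csv"))    # ['xxremittanceyy.csv', '2020_remittance_0416.csv']
```

If the real names have a variable number of characters around "remittance", the `*` form is probably the one you want.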

The “Download File” action requires me to know the name of the file and to specify a name and path for storing it. But I don’t know the file names beforehand, and there are hundreds of files every month.

Basically, I just want to move the files from the HTTPS site to a local folder so I can then use the “Load file” action on them.

I’d appreciate it if someone with PowerShell knowledge could give me some sample code!

Hi Hendrik,

How do you typically obtain a list of files to download? Is it an HTML page with a list of files, or something else?

Also, I suppose PowerShell won’t be required here. It should be possible to do this with regular EasyMorph actions and iterations.

The files are downloaded manually by a person. It takes days!

She visits a webpage, finds the right folder, then visually finds the files for the month and clicks each one to download it. She needs to enter a username and password on the first download.

We have 13 folders to visit.

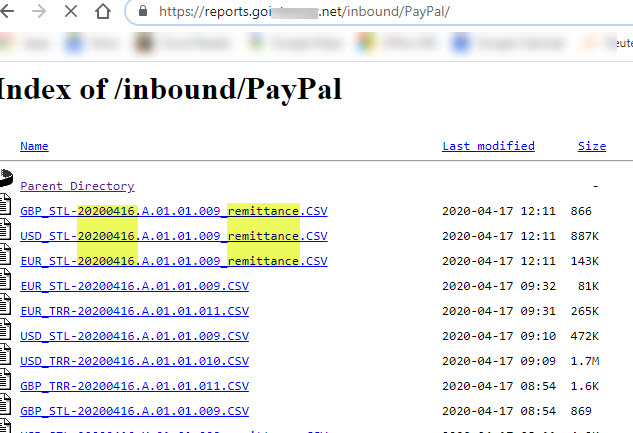

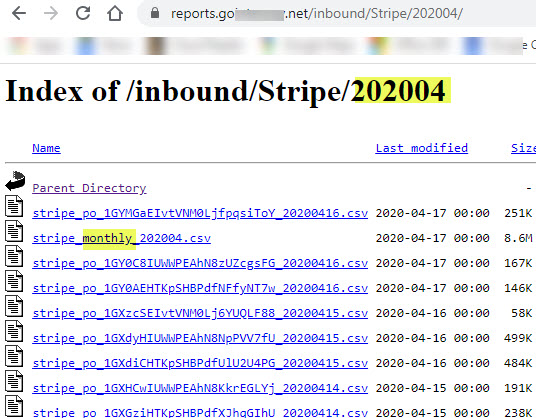

Some sites organize the files by month (see Stripe below, where 202004 denotes the month). There she downloads all files EXCEPT the ones with “monthly” in the file name.

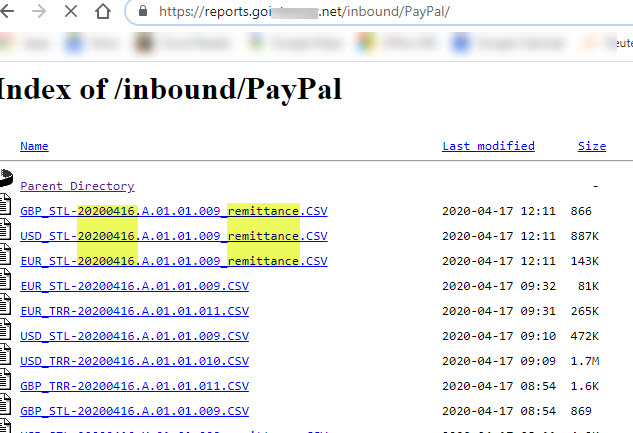

Others keep all files in one folder (thousands of files; see PayPal below). There she picks ONLY the files that have “remittance” in the name and fall within the month (the date is denoted by 2020416 in the file name).
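The two selection rules described above could be expressed roughly as the predicates below. This is a Python sketch for illustration only; the function names and sample file names are hypothetical, and treating the month as a substring such as "202004" is an assumption, since the exact date format in the real PayPal file names isn't shown here.

```python
def keep_monthly_folder_file(name: str) -> bool:
    """Stripe-style rule: the folder (e.g. 202004) already fixes the month,
    so keep every file except those with "monthly" in the name."""
    return "monthly" not in name.lower()


def keep_single_folder_file(name: str, date_token: str) -> bool:
    """PayPal-style rule: keep only "remittance" files whose name contains
    date_token. The token format (here year+month, e.g. "202004") is an
    assumption; match it to whatever the real file names use."""
    return "remittance" in name.lower() and date_token in name


# Hypothetical file names, for illustration only.
stripe_files = ["balance_202004.csv", "Monthly_summary_202004.csv"]
paypal_files = ["remittance_20200416.csv", "settlement_20200416.csv", "remittance_20200316.csv"]

print([n for n in stripe_files if keep_monthly_folder_file(n)])               # ['balance_202004.csv']
print([n for n in paypal_files if keep_single_folder_file(n, "202004")])      # ['remittance_20200416.csv']
```

In EasyMorph the same logic would be a filter on the file name column; the predicates just make the rules explicit.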

Ideally, the process would have the following actions:

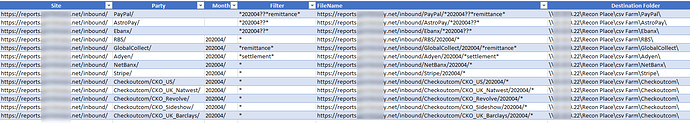

- Import an Excel file with path names and file name filters to use as parameters in EM. Something like this.
- Iterate through this list, using the Filename column to determine which files to download.
- Get a list of files in each folder and filter it in EM.
- Iterate through the filtered list and copy each file to the “Destination Folder”.
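As a rough illustration of the first step, the parameter table could be read like this. The sketch assumes the Excel sheet has been saved as CSV (Python's standard library can't read .xlsx); the “Filename” and “Destination Folder” columns come from the description above, while the “Path” column name and the `Source`/`read_sources` names are hypothetical.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class Source:
    path: str         # folder URL to list (hypothetical column "Path")
    pattern: str      # file name filter (column "Filename")
    destination: str  # local folder (column "Destination Folder")


def cell(row: dict, key: str) -> str:
    """Return a stripped cell value, treating missing cells as empty."""
    return (row.get(key) or "").strip()


def read_sources(text: str) -> list[Source]:
    """Parse the parameter table, assuming it was exported from Excel as CSV."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        Source(cell(row, "Path"), cell(row, "Filename"), cell(row, "Destination Folder"))
        for row in reader
        if cell(row, "Path")  # skip rows without a folder
    ]


# One illustrative row, using the Stripe folder and destination from this thread.
sample = """Path,Filename,Destination Folder
https://reports.abc.net/inbound/Stripe/202004/,*.csv,C:\\Stripe
"""

for src in read_sources(sample):
    print(src.path, src.pattern, src.destination)
```

Each `Source` then corresponds to one iteration of the outer loop.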

Thanks for the help.

See the example below that obtains a list of files from a web page.

get list of files.morph (3.3 KB)

The project uses the “Web request” action to save an HTML page, then imports it as a text file (no delimiter, quoting ignored, no column names) and extracts the file names using regular EasyMorph actions.
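For readers curious what that extraction boils down to, here is a Python sketch of the same idea (it is not the attached EasyMorph project). It assumes the page is a plain directory listing in which each file is an `<a href="...">` link; `file_names` is a hypothetical helper that keeps the last path segment of each link and decodes escapes such as `%20`.

```python
from html.parser import HTMLParser
from urllib.parse import unquote


class LinkCollector(HTMLParser):
    """Collect the href targets of <a> tags on a directory-listing page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def file_names(html: str, extension: str = ".csv") -> list[str]:
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    names = []
    for href in parser.hrefs:
        # Drop any query string, then keep the last path segment, URL-decoded.
        last = unquote(href.split("?", 1)[0].rstrip("/").rsplit("/", 1)[-1])
        if last.lower().endswith(extension):
            names.append(last)
    return names


# A made-up listing page: parent link, two files, one subfolder.
page = ('<a href="../">Parent</a> <a href="a_remittance_1.csv">a</a> '
        '<a href="/inbound/Stripe/202004/b%20monthly.csv">b</a> <a href="202005/">202005/</a>')
print(file_names(page))  # ['a_remittance_1.csv', 'b monthly.csv']
```

Real listing pages vary, so the link filter may need adjusting once you see the actual HTML.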

How you log in with a username and password in EasyMorph depends on how the website’s authentication works. If it uses Basic HTTP authentication, you can specify the credentials right in the Web Location connector used in the “Web request” action.
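For reference, Basic HTTP authentication simply means sending an `Authorization: Basic <base64 of user:password>` header with each request, which is what a connector configured with those credentials takes care of. A minimal Python sketch, with placeholder credentials and a hypothetical `basic_auth_request` helper:

```python
import base64
import urllib.request


def basic_auth_request(url: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request that carries an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


# Placeholder credentials; nothing is sent until urllib.request.urlopen(req) is called.
req = basic_auth_request("https://reports.abc.net/inbound/Stripe/202004/", "user", "secret")
print(req.get_header("Authorization"))  # Basic dXNlcjpzZWNyZXQ=
```

If the site instead uses a login form or cookies, this header approach won't work; that matches the caveat that it depends on how the site authenticates.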

The project gives you an idea of how to obtain lists of files for different locations and then filter them to keep only the ones you need to download.

Downloading a file can be done the same way using the “Web request” action, or using the “Download file” action. “Web request” requires a path relative to the one specified in the Web Location connector. “Download file” requires a full path.
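The difference between a relative path and a full path is essentially URL joining. The Python sketch below shows how a relative path (such as a listed file name under a month folder) resolves against a base URL. The base shown is an assumption modelled on the Stripe example in this thread, and `full_url` is a hypothetical helper that expects an unencoded path.

```python
from urllib.parse import quote, urljoin

# Assumed base URL, as it might be set on the connector; the trailing slash matters.
BASE = "https://reports.abc.net/inbound/Stripe/"


def full_url(relative_path: str, base: str = BASE) -> str:
    """Resolve an unencoded relative path against the base to get a full URL."""
    # quote() keeps "/" and escapes spaces and other unsafe characters.
    return urljoin(base, quote(relative_path))


print(full_url("202004/some file.csv"))
# https://reports.abc.net/inbound/Stripe/202004/some%20file.csv
```

Without the trailing slash, `urljoin` treats "Stripe" as a file name and replaces it, which is a common source of wrong download paths.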

Use iterations to download each file from the filtered list.
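Conceptually, the iteration is a loop over the filtered list that saves each file under its original name. The Python sketch below shows that loop; `download_all` is a hypothetical helper, and the `opener` parameter is an assumption added so you can plug in authentication or test without a network (by default it uses `urllib.request.urlopen`).

```python
import os
import shutil
import urllib.request
from typing import BinaryIO, Callable, Iterable
from urllib.parse import unquote, urlsplit


def download_all(urls: Iterable[str], dest_folder: str,
                 opener: Callable[[str], BinaryIO] = urllib.request.urlopen) -> list[str]:
    """Download each URL into dest_folder, keeping the file name from the URL."""
    os.makedirs(dest_folder, exist_ok=True)
    saved = []
    for url in urls:
        # Last path segment of the URL, with %20 etc. decoded, becomes the local name.
        name = unquote(urlsplit(url).path.rsplit("/", 1)[-1])
        if not name:
            continue  # skip folder URLs ending in "/"
        target = os.path.join(dest_folder, name)
        # Stream the response body straight into the local file.
        with opener(url) as response, open(target, "wb") as out:
            shutil.copyfileobj(response, out)
        saved.append(target)
    return saved
```

Usage would look like `download_all(urls, r"C:\Stripe")`. File names that are illegal on Windows are not handled here.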

Once you set it up, I believe you will be able to reduce days of work to a few minutes.