Hi, using Premium Power BI as main BI/dashboard tool. EM much better than Power Query because it’s way faster (even with Premium capacity).

Using an ODBC connector with whitelisted IP to a remote Redshift DB and downloading huge CSVs (+100M rows). Doing all transforms in locally in EM. Works great. Then need to assemble tables in a complex schema in Power BI desktop then load DM and dashboards to premium services cloud. So what is best practice?

-

Exporting CSVs for subsequent import to PBI is super inefficient. Takes forever to load, save, refresh, etc. But it works …

-

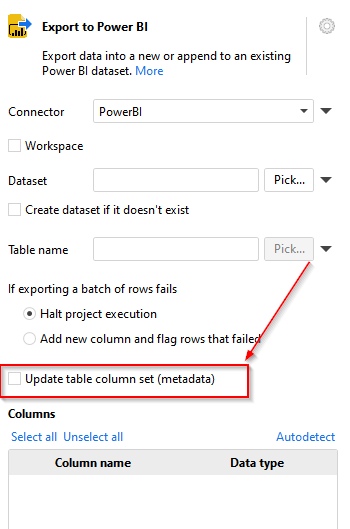

Exporting to PBI dataset works well (fast load, no errors) but if I take this path I can only load table into into a dataset and then only load one dataset into a PBI model, unless I have read the latest posts on this incorrectly. For some weird reason, if I load an EM dataset into a PBI model, that’s all i can load. Can’t even open get data or transform window. Frustrating.

-

Exporting to a local SQL Server database. This works much better when data is finally loaded in PBI but it is very cumbersome (e.g., need to recreate each column in each table in SQL Server, correct?) and seems like a long walk for a short drink.

Am I missing something? Are there other options? Any insights greatly appreciated.