Dear all,

I manged to get so far that I can move on to step 3 for my “real problem”-testing-projects.

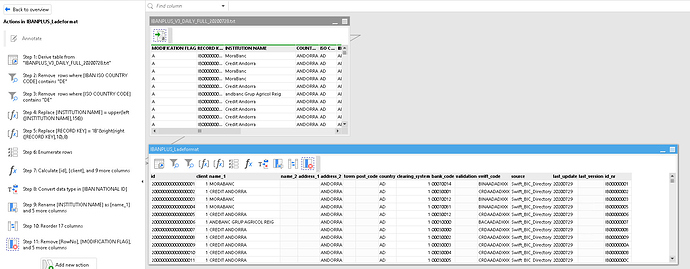

What is done so far:

- I select needed files from a “List of files” to load them to a second module in the project.

- There I tweak the data as needed until I get to a table which looks exactly like the planned destination table on SQL-Server.

What is missing:

- Delete old data from the destination table on SQL-Server and insert the new data from EasyMorph.

This is where I wonder what would be the best way to do this step 3 “Delete old data from SQL-Server and insert new data” to my project. And as well: Where to add this steps exactly? (Do I need to add them just to the table I will finally load to SQL-Server? Would it possible to seperate that “delete and insert” to another module in the same project [but then how to connect it to the final table]?)

My final tables (different projects) have the following sizes:

IBANPLUS: 17 x 170.000

BLZVZ: 17 x 3.500

Example:

Should I use already the more complex BULK LOAD feature of SQL-Server? (New questions: Where to save the temporary CSV-file? and How to use that temp-CSV-File back in the BULK LOAD?

Best regards,

Patrick

It depends on what do you mean by "deleting old data". If you mean "delete rows, but keep the field names and types" then you can use the "Database command" action with the "Trim table" command.

If you need to entirely delete the database table and re-create with new field names and data types, then you can use the "Database command" action with commands "Delete table" and "Create table".

If by "deleting" you actually mean "updating" the table and replacing old values with new ones, then you may need to use the "Update database table" action.

Also, I would suggest saving the old data in a temporary file before deleting it, just in case something goes wrong. You can do this in a separate table by using the "Import from database" action, and then "Export to dataset" to save the data into a temporary file. Then use the "Synchronize action" in the main table to wait until the export is complete. When replacing old data with the new one is finished successfully, you can delete the temporary file using the "File command" action.

You can add these steps right in the main table.

You can surely put these actions into a separate module, and then use the "Call" action to run it. However, you will need to pass the dataset into the called module using the "Input" action which is a bit advanced technique and may be difficult to figure out for a new user.

Hmm, I don't think so. 170K rows is not that many. It will probably take a few minutes to export using the regular "Export to database" action, but shouldn't be too long. Maybe around 3-5 minutes. Set the batch size to max (1000 rows per query) in the action for faster export.

Note that in the next version we will be introducing a new action for bulk loading data into SQL Server. It will export data faster, so you will be able to update your projects to use the new action. For now, the regular export action would work fine, just not too fast.