Hi,

I would like to export data to multiple tables in a database.

If all tables kan be exported, then, they can be written to the database. If there is one or more errors in one of the exports, all other exports have to fail as well.

Is there a way of achieving this in EasyMorph ?

Maybe something like:

- Action database command > start a transaction and create a savepoint

- Iterating over list of CSV-file to export to the database

- After iteration, figuring out if the data has been correctly exported tot the database ==> how to do this check?

- If export was successful > Action database command > end the transaction and release the savepoint

- If the export was not successful > Action database command > rollback to the savepoint

Kind regards

Nikolaas

Hi Nikolaas,

There is no built-in way to achieve this in EasyMorph.

Since Export to DB starts it’s own transaction, your approach may work only is your DB support nested transactions. Also, it’s possible that there will be some other problems with this approach which I can’t foresee without trying it first.

As for figuring out if the data has been correctly exported - you should create one main project and two subprojects. The main project should implement all the 5 steps of your workflow. At step 3 it should use “Call another project” action with “Capture errors and continue” mode. Then you should use “Derive table” action with “On condition” mode to either run step 4 or step 5 depending on the result of step 3.

The first subproject, called at step 3, should use “Iterate” action with “When an iteration fails: Halt project” mode to all second subproject which should execute the export itself. “Export to DB” action should be configured as follows:

- When value doesn’t match column type: Fail batch.

- When exporting a batch fails: Halt execution.

This way your iteration and export will fail on the first error and “Call another project” action in the main project will catch that error right away.

Regards,

Andrew

Hi Andrew,

I have been testing the load process a little more.

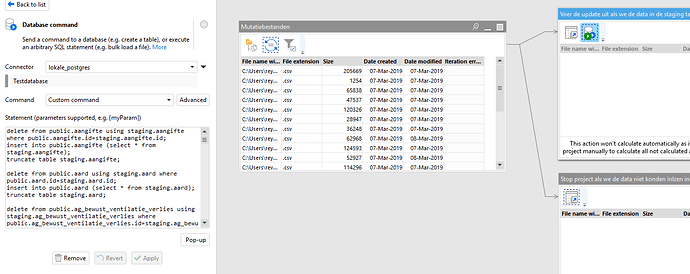

Now I have worked out the following solutions

- I create two schema’s in the postgres database and I execute the same DDL statements so that both are identical. One is de database and the oher is a staging area.

- I load the data with modified or new records in the staging tables. I do this with an iteration in EasyMorph and I collect errors.

- If there were errors, the flow stops.

- It there were no errors I execute one big SQL-statement that I pasted in the database command action and that removes matching database rows (if rows already existed) and inserts the data from the staging tables).

My question is the following:

If I execute the database command action: does It work like a transaction ? If for some reason there goes something wrong: are all SQL-statements rolled back ? I have the impression that it does.

So normally I should not run into an issue with having only parts of my databse updated and others not ?

I suppose I do not need to provide the BEGIN and COMMIT statement in the SQL if I use the database command (if it is already a transaction)?

Hi Nikolaas,

It depends on a target database and connector settings.

For “native” PostgreSQL connector it seems to be the case. But it’s better to just test that case with your actual database settings and connection settings.

Also, have in mind that in other databases some of the statements (usually DDL commands) may implicitly current transaction. But in PostgreSQL most of DDL commands are transaction-safe, as far as I know.

Nikolaas, as for starting a transaction in one “Database command” action and committing or rolling back that transaction in other “Database command” action - it seems that transactions, started in “Database command”, are committed or rolled back by a database when session end (i.e. “Database command” action completes). But there may be a way to share transaction between sessions in some databases.

Hi Andrew,

I have done some testing. When we paste a series of DML commands in the database action it seems that indeed it is executing that in all or nothing fashion.

Kind regards

Nikolaas